I haven’t had time to read the full article yet, but the topic is really interesting. This is certainly a great application of deep learning. Does anyone have insights on this topic?

At first glance this doesn’t seem to be as impactful as you might think…

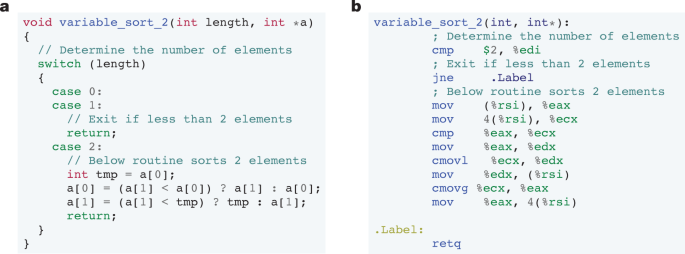

We reverse engineered the low-level assembly sorting algorithms discovered by AlphaDev for sort 3, sort 4 and sort 5 to C++ and discovered that our sort implementations led to improvements of up to 70% for sequences of a length of five and roughly 1.7% for sequences exceeding 250,000 elements.

Wouldn’t this still be pretty massive at large scales like datacenters?

Yes, I’m saying this is actually more important than you might initially think.

I gave it a quick skim. It seems the improvements are on specific sort tasks. There may also be some fitting to the distribution of sequences. It won’t beat O(n log n) in the general case, but it might do better for common situations in the data set.

It’s still neat they made it work.

My first thoughts were along the same lines. I think the new “algorithms” are heuristics for small values of n.
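To make "small values of n" concrete, here is a rough sketch of what a fixed-length sort for exactly three elements can look like in C++. To be clear, this is not the instruction sequence AlphaDev found. It is just a textbook three-comparator sorting network, and the names `cswap`, `sort3` and `sort3_matches_std_sort` are my own. As I understand it, routines of this kind are used as building blocks for tiny inputs, which would explain why the gain is large at length five and small for huge arrays.

```cpp
#include <algorithm>
#include <array>
#include <utility>

// Compare-exchange: after the call, a <= b.
inline void cswap(int& a, int& b) {
    if (b < a) std::swap(a, b);
}

// Sorts exactly three elements with a fixed sequence of three comparators.
// Illustrative only: a standard sorting network, not AlphaDev's output.
inline void sort3(std::array<int, 3>& v) {
    cswap(v[0], v[1]);  // v[0] <= v[1]
    cswap(v[1], v[2]);  // v[2] now holds the maximum
    cswap(v[0], v[1]);  // order the remaining two
}

// Exhaustive check against std::sort on all 27 inputs drawn from {0, 1, 2}.
inline bool sort3_matches_std_sort() {
    for (int a = 0; a < 3; ++a)
        for (int b = 0; b < 3; ++b)
            for (int c = 0; c < 3; ++c) {
                std::array<int, 3> got{a, b, c};
                std::array<int, 3> want = got;
                sort3(got);
                std::sort(want.begin(), want.end());
                if (got != want) return false;
            }
    return true;
}
```

The sequence of comparisons is the same for every input, so there is no loop and no data-dependent control flow beyond the swap itself. That is the kind of code where shaving off a single instruction can be measurable.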

Sorry, I’m now seeing this was already posted in this community a few days ago :(