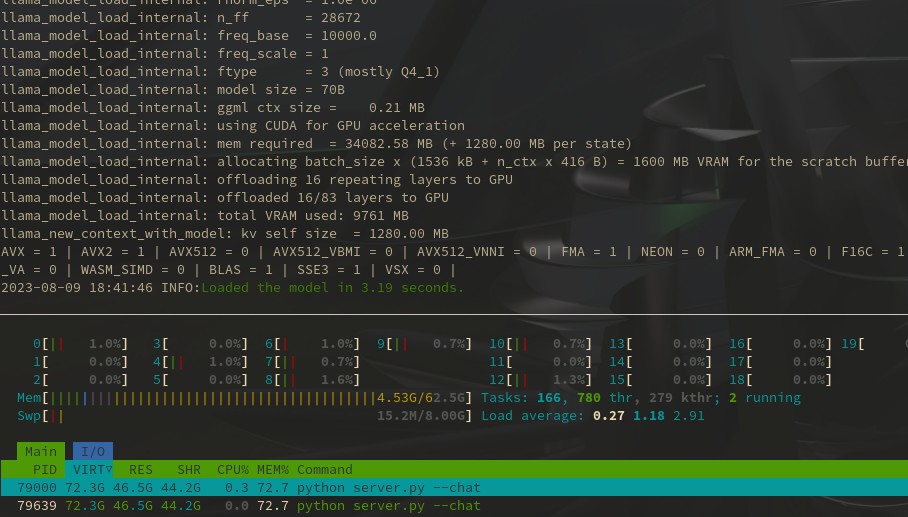

So maybe you’ve seen something like this:

Well, here are some screenshots from a YT video I found interesting on the subject (video linked below). In a nutshell, AVX-512 is a set of x86 SIMD instruction set extensions that operate on 512-bit-wide vector registers, so a single instruction can work on, say, 16 32-bit floats at once. This rabbit hole may help you understand why CPUs are so much slower than GPUs at this kind of work, or, if you already know the basics of computer architecture, it will at least show where the real bottleneck is located.

https://www.youtube.com/watch?v=bskEGP0r3hE
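If you want to see which AVX-512 subsets your own CPU reports, here is a rough sketch. It assumes Linux on x86, where the kernel lists feature flags on the `flags` line of `/proc/cpuinfo`. The function names `avx512_flags` and `lanes` are just made up for this post. On other systems the file won't exist and the script simply reports nothing.

```python
def avx512_flags(cpuinfo_text):
    """Return the sorted avx512* feature flags found in /proc/cpuinfo-style text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the "flags" line matters (x86 Linux layout is assumed here).
        if sep and key.strip() == "flags":
            found.update(f for f in value.split() if f.startswith("avx512"))
    return sorted(found)


def lanes(register_bits, element_bits):
    """How many elements of a given width fit in one vector register."""
    return register_bits // element_bits


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as fh:
            text = fh.read()
    except OSError:
        text = ""  # not Linux, or the file is unreadable
    print("AVX-512 flags:", avx512_flags(text) or "none reported")
    print("float32 lanes per 512-bit register:", lanes(512, 32))
```

An empty result only means the kernel does not report any `avx512*` flags. It says nothing about how fast a given chip actually runs those instructions.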

I’m really curious how much the various AVX-512 implementations improve CPU performance. Obviously someone felt it was important enough to implement a lot of these instructions in llama.cpp. In my experience there is no replacement for running a large model. I use a Llama 2 70B model daily. I can’t add more system memory beyond the 64 GB I have. I need to look into using a swap partition for even larger models, but I haven’t tried this yet. Looking at accessible hardware for AI, the lowest-cost path to even larger models seems to be a second-hand server/workstation with 256-512 GB of system memory, as many cores as possible, and whatever the best implementation of AVX-512 is that can be had for a good price, plus a consumer GPU with 24 GB of VRAM. That could still come in under $3K, and on paper it might run a 180B model while still being cheaper than a single enterprise GPU with 48 GB of VRAM. Maybe someone here has actual experience with this and with how various chipsets handle the load in practice. It is just a curiosity I’ve been thinking about.
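For a rough sanity check on the "on paper" part, here is a back-of-the-envelope sketch of how much memory the weights alone would need. This is my own simplification: it counts only parameters times bits per weight, ignores the KV cache, context buffers, and runtime overhead, and real quantized files usually use somewhat more than the nominal bits per weight. `weights_gb` and `split_gpu_cpu` are hypothetical helper names, not anything from llama.cpp.

```python
def weights_gb(params_billions, bits_per_weight):
    """Approximate weight size in GB (1 GB = 1e9 bytes), weights only."""
    return params_billions * bits_per_weight / 8


def split_gpu_cpu(total_gb, vram_gb):
    """Naive split: fill the GPU first, the rest goes to system RAM."""
    on_gpu = min(total_gb, vram_gb)
    return on_gpu, total_gb - on_gpu


if __name__ == "__main__":
    for params in (70, 180):
        for bits in (4, 8, 16):
            total = weights_gb(params, bits)
            gpu, ram = split_gpu_cpu(total, 24)
            print(f"{params}B @ {bits}-bit: {total:.0f} GB total, "
                  f"{gpu:.0f} GB on a 24 GB GPU, {ram:.0f} GB in system RAM")
```

By this estimate a 180B model at a nominal 4 bits per weight needs about 90 GB for weights, and about 180 GB at 8 bits, so either would fit in 256 GB of system memory with room to spare. Whether it runs at a usable speed is a separate question.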

Hardware moves notoriously slowly, so I imagine we still have several years before a good solution shows up on the market.

Somebody needs to build a good Asimov character roleplay and coax the secret of the positronic brain out of him. I’d like to buy the new AMD R-Daneel Olivaw 5000, please. Hell, I’ll settle for an RB-34 Herbie model right now.