My main account is dragontamer@lemmy.world. It turns out that having a second account elsewhere in the federation is a good idea. I’ll use this dragontamer@lemmy.ca account when lemmy.world gets DDoSed (or suffers a similar outage), so I can keep posting and moderating even if my main account goes down.


2·10 months ago

I hate this setup.

The Raspberry Pi Pico W has Wi-Fi. Cool. The Pico W connects to your local Wi-Fi network, which connects to your ISP, which connects to the internet, which connects to ntfy.sh. ntfy.sh then pushes a notification through Apple’s and/or Google’s push service, which has to find your phone, and your phone is most likely sitting on the same Wi-Fi network as the Pico. That push goes back out to your ISP, which sends it to your Wi-Fi network, which sends it to your phone, and you finally get the alert.

It’s probably the easiest way to get things done, but a Bluetooth and/or Wi-Fi Direct alert of some kind would be better. Phone APIs don’t seem too keen on Wi-Fi / socket servers, however. Bluetooth would likely be the better solution, but it involves pairing and other such noise that complicates the process.

Hmmmmm. I see that ntfy.sh is the easiest way to bridge it all together. But that doesn’t mean I have to like it.
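For reference, the Pico’s side of that whole chain is a single HTTP request. Below is a minimal desktop-Python sketch, assuming ntfy.sh’s documented publish interface (POST the message body to `https://ntfy.sh/<topic>`, with an optional `Title` header). The topic name `my-pico-alerts` and the helper names are hypothetical. On the Pico W itself, MicroPython’s `urequests` module offers a similar `post()` call.

```python
import urllib.request
from typing import Optional

NTFY_SERVER = "https://ntfy.sh"  # public ntfy instance; a self-hosted server URL also works


def build_ntfy_request(topic: str, message: str, title: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST that publishes `message` to an ntfy topic."""
    headers = {}
    if title is not None:
        # ntfy reads an optional notification title from the "Title" header
        headers["Title"] = title
    return urllib.request.Request(
        f"{NTFY_SERVER}/{topic}",
        data=message.encode("utf-8"),  # the request body becomes the notification text
        headers=headers,
        method="POST",
    )


def send_ntfy(req: urllib.request.Request, timeout: float = 10.0) -> int:
    """Actually send the request over the network and return the HTTP status code."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    # Hypothetical topic: anyone who knows a topic name can subscribe, so pick something hard to guess.
    req = build_ntfy_request("my-pico-alerts", "Door sensor tripped", title="Pico W")
    print(req.get_method(), req.full_url)  # call send_ntfy(req) to really publish
```

Splitting "build" from "send" keeps the request easy to inspect without touching the network. It also makes the hop count obvious: everything after `urlopen` happens on ntfy’s and the phone vendor’s side.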

1·10 months ago

Old news.

https://www.cnx-software.com/2019/02/21/stmicro-stm32mp1-cortex-a7-m4-mpu/

This happened in 2019. The STM32MP2 release is coming soon, so we’re well on our way to the second generation of STM32 microprocessors.

I’m mostly an MCU fan. MPUs are awesome too, though the gross increase in complexity makes my head spin. Supporting “proper” DDR2 and 512MB sounds awesome, though: for years my personal VPS has been just a 512MB instance, because that’s enough for personal websites and simple Linux tasks.

512MB DDR2 on a single-core ARM Cortex-A7 is exciting to me. But… in a way that feels forbidden: too complex for me to truly get into and use. I’d probably just buy something like a BeagleBone Black ($50 at the moment) or a Raspberry Pi (if it ever comes back to a good price).

2·10 months ago

That didn’t fit with his decision to limit how many tweets users are able to view.

The theory behind that is that Twitter failed to pay its web-services bills and needed to cut traffic suddenly, or else it would be shut down by Amazon / Google.

After Twitter paid Amazon/Google, they raised the tweet-view limits accordingly, but the damage was already done.

2·10 months ago

The Washington Post confirms the story: https://www.washingtonpost.com/technology/2023/08/15/twitter-x-links-delayed/

Yeah, because the Win32 API + DirectX is more stable than the rest of Linux.

There’s a reason why so many Steam games on Linux run their Windows builds through Wine, which reimplements the Win32 API, rather than shipping native Linux binaries. Wine is more stable than almost everything else, and Windows’s behavior (both documented and undocumented) has legendary levels of compatibility.

You know that doesn’t matter when commercial software often only releases and tests its software on Ubuntu and Red Hat, right?

I run Ubuntu / Red Hat / etc. etc. because I’m forced to. Do you think I’m creating a lab with a billion different versions of Linux for fun?

Linux kinda-sorta works if you’ve got the freedom to run `./configure && make && make install`, rebuilding binaries and recompiling often. Many pieces of software are designed to work across library changes (relying on the compiler/linker to fix up minor issues).

But once you have proprietary binaries moving around (and you’ll be facing proprietary binaries, because no company will give you its source code), you start having version-specific issues. The Linux community just doesn’t care very much about binary compatibility, and it never will, because it’s anti-corporate and doesn’t want to offer good support for binary code like this. (And it prefers to force the GPL down your throat.)

There are certainly some advantages and disadvantages to Linux’s choice here (or really, Ubuntu’s / Red Hat’s / etc., since each distro really is its own OS). But in the corporate office world, Linux is a very poor performer in practice.

> like saying you are running last night’s upload

If only. I’m running old stuff, not by choice either.

Ubuntu 18.04 and later literally fail to install on one of my work computers, so I’ve been forced to run Ubuntu 16.04. BIOS incompatibilities and hardware issues happen, man, and they force me onto older versions. On some Dell workstations I’ve bought for my org, Ubuntu 22 fails to install, so we’re forced to run Ubuntu 20.04 on those.

Software compiled on Ubuntu 16.04 has issues running on Ubuntu 20.04, which means these two computers exhibit different sets of bugs when our developers run and test on them.
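One quick way to see which shared libraries a binary built on the older release can’t find on the newer one is to look for `not found` entries in `ldd` output. A minimal sketch, assuming a glibc-based distro with `ldd` installed; the helper names and the sample soname are hypothetical. It only catches missing libraries, not subtler ABI or behavior differences. Also note that `ldd` can end up executing the program in some cases, so only run it on binaries you trust.

```python
import subprocess
from typing import List


def missing_libraries(ldd_output: str) -> List[str]:
    """Return the sonames that ldd reported as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        # A missing dependency looks like: "\tlibfoo.so.1 => not found"
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


def check_binary(path: str) -> List[str]:
    """Run ldd on `path` and return any shared libraries it could not resolve."""
    result = subprocess.run(["ldd", path], capture_output=True, text=True, check=False)
    return missing_libraries(result.stdout)


if __name__ == "__main__":
    # Hypothetical ldd output for a binary built on an older release
    sample = (
        "\tlinux-vdso.so.1 (0x00007ffc8a5f2000)\n"
        "\tlibssl.so.1.0.0 => not found\n"
        "\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f1e2a200000)\n"
    )
    print(missing_libraries(sample))
```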

I’m running old LTS Ubuntu releases not because I want to, mind you, but because hardware incompatibility bugs have forced me to. At least we have Docker, so the guy running Ubuntu 20.04 can install Docker and create an Ubuntu 16.04 container to run the 16.04 binaries. But it’s not as seamless as Linux guys think.
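The Docker workaround boils down to one `docker run` invocation. Here is a minimal sketch that builds that command, assuming the stock `ubuntu:16.04` image from Docker Hub and a binary located inside the bind-mounted directory. The function names and the `./build/mytool` path are hypothetical. The stock image only ships base libraries, so any extra runtime libraries the binary needs would have to be installed into a custom image.

```python
import os
import shlex
import subprocess
from typing import List, Sequence


def docker_run_args(binary: str, args: Sequence[str] = (), image: str = "ubuntu:16.04",
                    workdir: str = ".") -> List[str]:
    """Build a `docker run` command that executes `binary` inside an older Ubuntu image.

    The host directory `workdir` is bind-mounted at /work, so `binary` should be a
    path relative to it (e.g. "./build/mytool").
    """
    host_dir = os.path.abspath(workdir)
    return [
        "docker", "run",
        "--rm",                     # delete the container when the program exits
        "-v", f"{host_dir}:/work",  # bind-mount the host directory into the container
        "-w", "/work",              # start in the mounted directory
        image,
        binary, *args,
    ]


def run_in_old_ubuntu(binary: str, args: Sequence[str] = (), **kwargs) -> int:
    """Run the binary in the container and return its exit code."""
    cmd = docker_run_args(binary, args, **kwargs)
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd, check=False).returncode
```

For example, `run_in_old_ubuntu("./build/mytool", ["--help"])` would run a 16.04-built tool from the current directory on a 20.04 host.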

CentOS is too stable, and a lot of proprietary code is designed for Ubuntu instead. So while CentOS is stable, you get subtle bugs when you try to force Ubuntu binaries onto it. If your group uses CentOS / Red Hat, that’s great. Except it’s not the most popular system, so you end up having to find Ubuntu boxes somewhere to run things effectively.

There’s plenty of Linux software these days that forces me (and the users around me) to use Linux in an office environment. But if you’re already running multiple Linux boxes (this box is Ubuntu, that one is Ubuntu 16 and can’t upgrade, that other box is Red Hat for the yum/RPM packages…), running an additional Windows box ain’t a bad idea anyway. You were already at like 4 or 5 computers just so this user could get their job done.

Once you start dealing with these issues, you really begin to appreciate Windows’s 30+ years of binary compatibility.

1·11 months ago

Additional info: use https://lemmy-status.org/ to check the status of https://lemmy.world.

1·11 months ago

Ongoing DDoS attack right now. Testing a federated post with my alt account. Lemmy.world is down as of this post, but I’m still able to reach Lemmy.ca as expected.

1·11 months ago

Test post for my alt to be promoted to moderator.

1·11 months ago

Posting here with my secondary account.

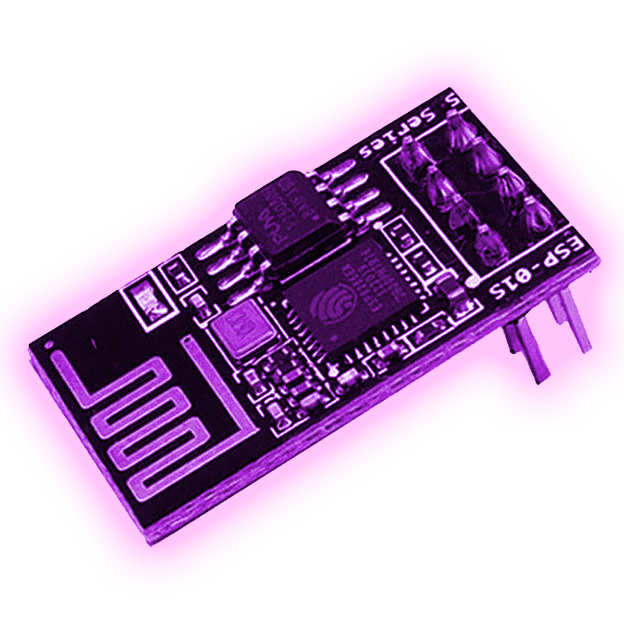

I realize this story is about the ESP32-S3, but the OTHER board discussed in that article is way more intriguing to me.

Wait, wut? They’ve got an i.MX unit? Well, now I’m super intrigued. I’ve posted about the difference between microcontrollers and microprocessors in my guides. But NXP’s i.MX series tries to straddle the line between the two categories. A Cortex-M7 is still solidly a “microcontroller” to me, but any i.MX intrigues me because its specs are incredibly high for a uC (or incredibly low-power for a uP).

With regard to the Arduino form factor… the board is roughly the size of a pack of 3x AA NiMH cells, or of the larger 18650 Li-ion cells. It’s a good size for portable applications, though a bit larger than something you’d reliably put in a pocket (big pockets can probably fit an Arduino-form-factor board plus batteries, though).

Adafruit themselves seem most interested in the cosplay-electronics community, so something like this would be a wearable you’d hide somewhere in your costume (e.g., controlling the lights on an Overwatch costume or another “techie” video-game costume that needs a lot of lights, fiber optics, and the like).

In any case, that’s three boards with three different sets of capabilities: from the lowly RP2040, to the ESP32-S3, to the i.MX Cortex-M7 “crossover” chip.