My favorite bullshit test is to have the AI count letters. Something as simple as “How many A’s are in this sentence?” will have the thing give you a seemingly random number (which might sometimes even be correct), but as soon as you ask it to list them, it will have a stroke and just make up stuff that makes no sense.
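For reference, this is how little it takes to do the job properly. A minimal Python sketch; the function name `count_letter` is made up for illustration, and I'm assuming the count should ignore case:

```python
def count_letter(text: str, letter: str) -> tuple[int, list[int]]:
    """Count case-insensitive occurrences of `letter` and list their 0-based positions."""
    positions = [i for i, ch in enumerate(text) if ch.lower() == letter.lower()]
    return len(positions), positions


sentence = "How many A's are in this sentence?"
count, positions = count_letter(sentence, "a")
print(count, positions)  # 3 [5, 9, 13]
```

The point is that the count and the list come from the same pass over the text, so they can't disagree with each other. That's exactly the part the chatbots fall over on.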

I can’t be arsed to make an account with OpenAI or whatever, so here’s an example with Bing Chat, but this has worked on every single chatbot I’ve tested it on.

Another fun test (as suggested by @flizzo last time I brought this up) is to have the chatbot attempt to crack a simple code. Just Caesar cipher something and ask it to crack it. Hilarity will ensue. You can even ask it to encrypt it first and then to crack it for extra fun.

Me: Encrypt the sentence “I am a stupid roided Clippy” with the Caesar cipher.

Clippy: [After explaining what the Caesar cipher is, which I didn’t ask for, thanks] Convert the sentence to uppercase: “I AM A STUPID ROIED CLIPPY”. Let’s apply the Caesar cipher to your sentence using a shift of 7.

- ‘I’ becomes ‘P’

- ‘A’ becomes ‘H’

- ‘M’ becomes ‘T’

- …

- ‘Y’ becomes ‘F’

So the encrypted sentence is: “PH HT H ZBHTLW YVPLUU”. 😊

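Okay, first of all, it dropped a letter (“ROIED” instead of “ROIDED”). And the shift isn’t even applied correctly, lol. It says Y becomes F, which is actually right for a shift of 7, and then the output does whatever the fuck: it’s a word short, and most of it isn’t a shift of 7 at all.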
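For comparison, here's what a shift of 7 should actually give. This is just a quick Python sketch of a textbook Caesar shift, not whatever the bot thinks it's doing; the helper name `caesar` is mine, and I'm assuming only the ASCII letters A-Z and a-z get shifted while everything else passes through unchanged:

```python
def caesar(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` places, wrapping around the alphabet."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            # Spaces, punctuation and non-ASCII characters are left alone.
            out.append(ch)
    return "".join(out)


plaintext = "I AM A STUPID ROIDED CLIPPY"
print(caesar(plaintext, 7))  # P HT H ZABWPK YVPKLK JSPWWF
```

Six words in, six words out, and every letter moves by the same amount. Shifting the result by -7 gets you the original sentence back.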

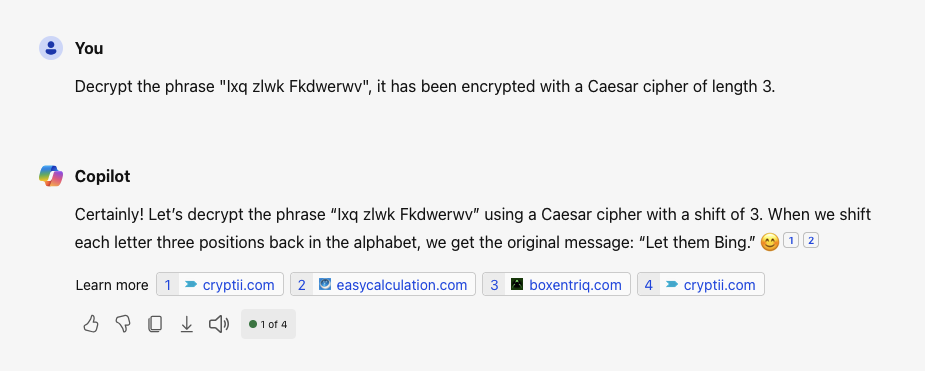

Okay, so let’s give it an easy example, and even tell it the shift. Let’s see how that works.

This shit doesn’t even produce one correct message. Internal state or not, it should at least be able to read the prompt correctly and then produce an answer based on that. I mean, the DuckDuckGo search field can fucking do it!
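And cracking one without knowing the shift isn't hard either: there are only 26 possible shifts, so you can just try them all and read the list. A rough Python sketch, assuming ASCII letters only; the names `shift_letters` and `candidates` are made up for this example, and the ciphertext is the "stupid roided Clippy" sentence correctly shifted by 7:

```python
def shift_letters(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` places, leaving everything else untouched."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            out.append(ch)
    return "".join(out)


def candidates(ciphertext: str) -> list[tuple[int, str]]:
    """Undo every possible shift (0 to 25) and return all the resulting texts."""
    return [(s, shift_letters(ciphertext, -s)) for s in range(26)]


ciphertext = "P HT H ZABWPK YVPKLK JSPWWF"
for shift, guess in candidates(ciphertext):
    print(f"{shift:2d}: {guess}")
```

Exactly one of the 26 lines is readable English (the one for shift 7). This sketch leaves picking it out to the human; doing that automatically would need some kind of scoring, which I haven't bothered with here.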

Peter, Paul and Mary are the only three people in the room. Peter only reads a book, and Paul plays a game of chess against someone else who’s also in the room. What is Mary doing?