This is “GameNGen” (pronounced “game engine”), and is the work of researchers from Google, DeepMind, and Tel Aviv University.

of course this shit came out of israel lmao.

Not content to destroy Palestine, Israel has its sight set on the entire world

Saw the videos of it in action, and the best way I can describe it is “what it’s like to play Doom in a dream.” The graphics are often fuzzy. The health and ammo counters go weird. Enemies move slowly and fade in and out of existence. Exploded slime barrels respawn when the player’s not looking. Very weird and surreal.

I could see using this tech’s limitations to its advantage, creating strange and uncanny experiences along the lines of LSD: Dream Emulator, if it weren’t so awful for the environment.

EDIT: It was posted to r/singularity lmao


There has to be a way to pipe image frames into a music viz like Milkdrop. Replace the waveform with the output of the game engine but keep all the trippy distortion effects.

> EDIT: It was posted to r/singularity lmao

Those bazinga rubes keep reading the tea leaves, watching the birds in the sky, and throwing the bones waiting for a sign of the imminent coming of the robot god.

They’ve been doing this at least since their predecessors called themselves “Extropians” in the 90s.

Comrade, I’m so glad you’re back.

Your hate for techbro/reddit “culture” is so pure and no one on this site does it better.

i saw this tweet yesterday and as a game dev and designer it legit made me mad

it’s the same shit AI bros do every time - they have to dumb down the meaning of the thing they’re trying to imitate poorly, because they have to shift the goal posts to pretend that what they do has any legitimacy

this is in no way a game engine, and trying to pretend it is requires abstracting and dumbing down entire fields of study these nerds have never even dipped their toes into

I think they’re aware of how absurd their claims are, but they think that the most important thing is the “potential” for this technology to generalize and eventually come to achieve what they’re promising now. The issue is they’d essentially need AGI to take it from this to a real game engine, and that’s obviously not happening.

> The issue is they’d essentially need AGI to take it from this to a real game engine, and that’s obviously not happening.

They can market it as “one step away from AGI” basically forever, especially with bullshit claims already established like “world simulation.”

Yep. Like any good grift, it isn’t about what it can actually do, but what it could hypothetically do if enough people “invest” in it.

You can have a planet burning treat printer do this at a massive energy cost or you can pay workers to do it for cheaper and with a lot less harm done. Which way, techbros?

I think the funniest part of this is that it still needs an extant game to be trained on and the end result still has no awareness or way of tracking your surroundings.

You literally already have a game that works but instead you want to strap two 4090s together to play a worse version of that same game with no level design and enemies disappear if you turn around fast enough (the ‘engine’ will quite literally forget about them if they’re off screen).

Like so much bazinga “innovation” it does something that was already done before, but worse and more expensive (and more costly to the planet).

> a type of world simulation

stop trying to pretend every fucking technotreat is just a step away from “singularity” nerd rapture.

wow this AI is so great it can very, very poorly imitate images from a 31-year-old video game that’s specifically well known for its versatility and ease of porting

maybe in a few years AI can catch up to the gaming abilities of a refrigerator

It is infinitely more impressive they got DOOM to play on a printer display than this horseshit

We have Doom, yes, but what about a shittier, energy-hungry version sprouted from the mind of a convulsing robot baby?

Accompanied by the wordless screaming of neuralinked monkeys, like a choir of forsaken angels howling at the unholy birth

We are rapidly approaching the level of lobotomised cyber baby cherubs, aren’t we? Something that even Games Workshop think is a step too far.

The video has the player being as slow and careful as possible, while keeping the rooms well framed at all times. In the last second of the video the player looks at a wall and then looks away, and they’ve been transported to somewhere entirely different.

> In the last second of the video the player looks at a wall and then looks away, and they’ve been transported to somewhere entirely different.

This is showing us how toddlers see the world. The model currently lacks object permanence. Everything outside its current field of view stops existing. When asked to redraw, it has to start from scratch. Everything in its world is ephemeral, floating around haphazardly. It has no hard ground to fall on and rise up from.
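A toy sketch of why that happens, assuming the model only "remembers" what shows up in a fixed window of recent frames. GameNGen does condition on a limited history of past frames and actions, but the window size, the `BoundedContextWorld` class, and the set-of-objects representation below are all made up for illustration and are not its actual architecture:

```python
from collections import deque

# Hypothetical window size, chosen only for illustration.
CONTEXT_FRAMES = 4


class BoundedContextWorld:
    """Hypothetical toy: knowledge comes only from the last N frames."""

    def __init__(self, context_frames: int = CONTEXT_FRAMES):
        # Each entry is the set of objects visible in one past frame.
        # deque(maxlen=...) silently drops the oldest frame when full.
        self.history = deque(maxlen=context_frames)

    def observe(self, visible_objects):
        # Record what is on screen this frame.
        self.history.append(frozenset(visible_objects))

    def known_objects(self):
        # Anything not visible in a frame still inside the window is gone.
        known = set()
        for frame in self.history:
            known |= frame
        return known


world = BoundedContextWorld()
world.observe({"imp", "barrel"})   # enemy and barrel on screen
for _ in range(CONTEXT_FRAMES):
    world.observe({"wall"})        # player stares at a wall
print(world.known_objects())       # only the wall is left
```

Stare at the wall for fewer than `CONTEXT_FRAMES` frames and the imp is still "remembered"; any longer and it has scrolled out of the window, so whatever gets drawn next is generated from scratch.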

This model is interacting with its world the way an 8-to-18-month-old toddler would. Instead of pointing it at Doom, I’d like to know what it does with an actual camera.

Point that generative model back at itself and give it access to a real world against which to compare its predictions.

I’m not sure why this person thinks it’s impressive to have an AI accurately predict the layout of one of the most commonly played Doom levels. If I remember correctly, this map even has a demo playing on startup.

Like what’s the point of this? Being able to exactly recreate the data it was trained on isn’t even an achievement in ML, it’s just called “overfitting”.

John Romero is still going to make you his bitch

Suck it down.

This is Baby’s First RNN level thinking.

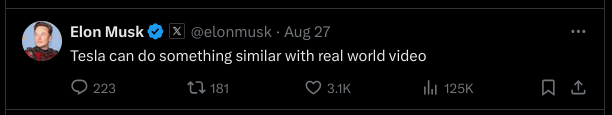

Elon had to sprinkle this with his own contribution of shit flakes.

is this why the “full self driving” feature doesn’t work? because after a new frame is emitted random things just pop up mysteriously?

The car just hallucinates what it believes the road in front of it should do, no actual interpretation of any real world is happening

So fucking desperate.

Look at me guys! The people I own do cool things worth talking about too (the things in question are cool because I said so)! That makes me cool by extension, right?! LOOK AT ME

The entire staff of id should be allowed to run you down with chainsaws tbh

Sadly John Carmack and Sandy Petersen are chuds

I enjoy Civvie 11’s YouTube videos except when he takes a moment to continue his “just joking, unless” praise rambles for Carmack, or when he covers the “Postal” games, which are unbearable edgelord trash even in review form.

That’s just a coin flip now isn’t it?

Diffusion models aren’t terribly power hungry compared to something like ChatGPT*, but it’s a weird and honestly worthless idea in the first place, so please shit on it.

*Technically you can have a GPT text embedding drive a diffusion model, so they can be just as bad, but that isn’t necessarily always the case.